This week Google released its artificial intelligence chatbot, Bard, which will now compete with ChatGPT, the AI chatbot released in late 2022.

The latter has quickly gained popularity among professionals and people in education.

The chatbot has sparked mixed reactions. While many believe that it helps to break complex information down into simple text, many others believe that it can be used for plagiarism and that the information it provides can be misleading or false.

Students at colleges and universities across the UK have been using ChatGPT for their homework and coursework.

Universities are also split on how to treat the chatbot, as feedback has shown that, in certain circumstances, ChatGPT has been quite helpful to students with learning difficulties such as dyslexia.

But many students have been using the different OpenAI platforms simply to write their essays or dissertations, and Russell Group universities are split on their approach.

Oxford University, Edinburgh and York have banned the use of OpenAI tools and similar technology.

Cambridge University has stated that it “recognise[s] that artificially intelligent chatbots, such as ChatGPT, are new tools being used across the world”, and it will allow students to use ChatGPT as long as it is not for coursework or exams.

The university’s policy states that “students must be the authors of their own work” and that presenting content from an AI platform as their own original work “would be considered a form of academic misconduct”.

Plagiarism detection services, such as Turnitin, have also laid out their plans for tackling text generated by artificial intelligence.

The American company is used by universities, schools and colleges across the world, and it has now introduced its AI Innovation Lab to “give a first-hand glimpse of what our technology (in development) can do”.

Turnitin can analyse text, and text generated with OpenAI tools will be marked as plagiarism.

How would an academic mark an essay created by ChatGPT?

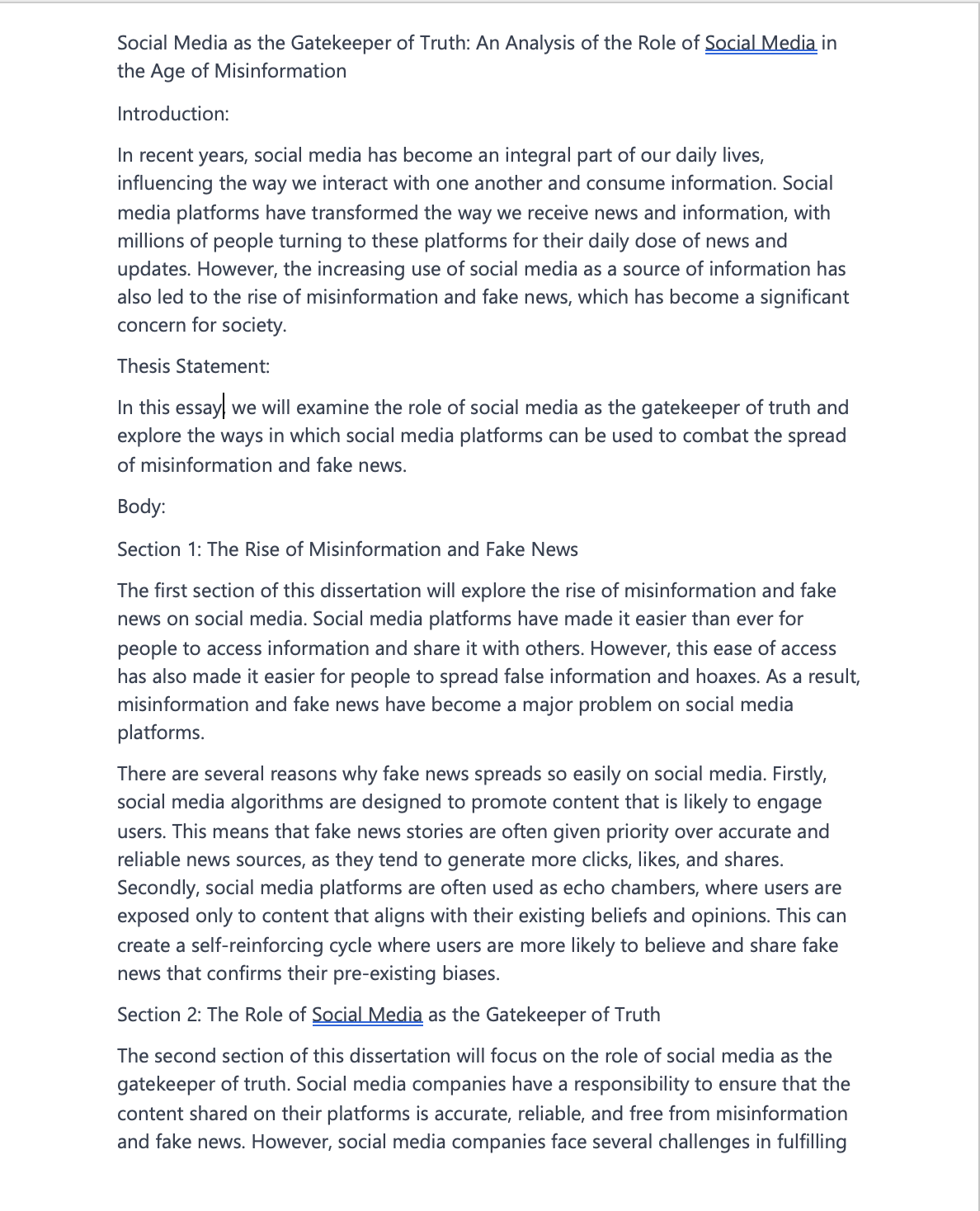

To see for ourselves how ChatGPT works, and to what extent it can cover an essay topic, we tasked the platform with writing a 1000-word essay on the topic of “Social Media as the Gatekeeper of Truth: An Analysis of the Role of Social Media in the Age of Misinformation”.

All seemed to be going well until the system finished generating the text, which appeared to be fewer than 1000 words.

When called out on the mistake, ChatGPT seemed very human-like and even politely apologised for it.

The system then generated another response, which was, in fact, fully compatible with the set task.

ChatGPT was able to hold a conversation, and to our surprise, it was very polite and human-like.

I asked Dan Thompson, a senior technician at Falmouth University, how he feels about it.

“It is quite an interesting concept, but I was scared about the way the ChatBot was being very human-like in its interaction.”

Andy Chatfield is a course leader and senior lecturer on the Journalism and Creative Writing course at Falmouth University.

“Social Media as the gatekeeper of truth” was the essay that we submitted to be graded by Andy Chatfield.

He agreed to grade an essay that we told him was written by an anonymous student at the university.

After thoroughly checking it, he tells us that he is very surprised by the outcome.

Andy tells us that he would grade the essay a 38 out of 100, which would “be a fail, but a rather narrow fail”.

He believes that the strong points of the essay are the “punctuation and the grammar, the relevant themes and structures, but without referencing, the credibility is lost”.

While ChatGPT can write very well and its essays have a good structure, the platform does not cite its sources or show where it got its information.

Higher education institutions are mostly against ChatGPT, but as the technology evolves, it could be used for good.

While its future is unknown, we can assure you that this article was not written by ChatGPT.